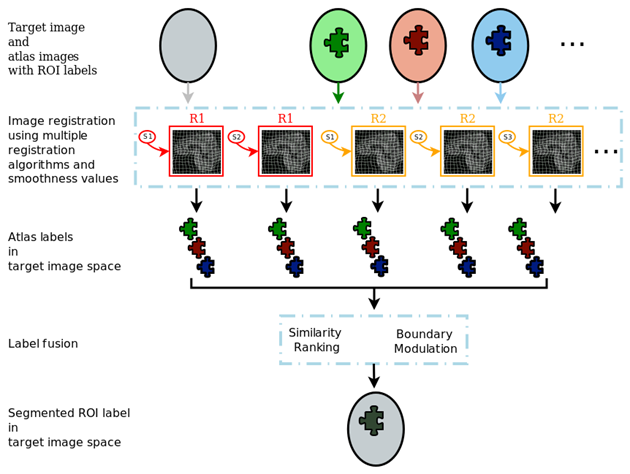

MUSE generates a large ensemble of candidate labels in the target image space using multiple atlases, registration algorithms and smoothness values for these algorithms. The ensemble is then fused into a final segmentation.
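The fusion step can be illustrated with a minimal sketch. It assumes each candidate segmentation is a flat list of integer labels over the same voxels and combines them by a plain per-voxel majority vote. This is a simple stand-in chosen for illustration, not MUSE's actual fusion rule, and the function name `fuse_labels` is hypothetical.

```python
from collections import Counter


def fuse_labels(candidates):
    """Fuse candidate label maps by per-voxel majority vote.

    candidates: list of equal-length lists of integer labels, one list per
    (atlas, registration method, smoothness) combination. Illustrative only;
    majority voting is an assumption, not the published MUSE fusion rule.
    """
    if not candidates:
        raise ValueError("need at least one candidate segmentation")
    n_voxels = len(candidates[0])
    if any(len(c) != n_voxels for c in candidates):
        raise ValueError("all candidates must cover the same voxels")

    fused = []
    for votes in zip(*candidates):
        # Label with the highest count; ties go to the first label encountered.
        fused.append(Counter(votes).most_common(1)[0][0])
    return fused


# Three candidate segmentations of a four-voxel image.
ensemble = [
    [0, 1, 2, 2],
    [0, 1, 1, 2],
    [0, 0, 1, 2],
]
fused = fuse_labels(ensemble)
```

Majority voting treats every candidate equally; a weighted vote would be the natural extension when some atlases or registrations are known to be more reliable in a given region.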

An illustration of the MUSE algorithm is given in the following figure.

Please cite our work in [NeuroImage2015] when referencing MUSE in your research.

GITHUB: https://github.com/CBICA/MUSE

NITRC: https://www.nitrc.org/projects/cbica_muse/

Inter-scanner variability presents challenges in pooling data from multiple centers, but it also presents an opportunity for image harmonization, the process of removing the systematic differences that exist in multi-center datasets via statistical methods.

CBICA has developed a statistically based harmonization technique for pooling large neuroimaging datasets across the lifespan. The method, called ComBat+GAM, was published in NeuroImage (https://doi.org/10.1016/j.neuroimage.2019.116450). It uses generalized additive models (GAMs) to estimate robust age trends in structural brain measurements while simultaneously correcting for differences among datasets.

As the medical imaging research community has witnessed a rapid growth of interest in acquiring and analyzing large multi-center datasets, one remaining obstacle to multi-center collaboration is inter-scanner variability. Systematic differences between images acquired on different scanners are inevitable, and harmonization is the process of removing these differences via statistical methods. ComBat+GAM extends an algorithm originally developed for batch-effect correction in genomics (https://doi.org/10.1093/biostatistics/kxj037). Similar adaptations have been published for diffusion tensor imaging data (https://doi.org/10.1016/j.neuroimage.2017.08.047), cortical thickness measurements (https://doi.org/10.1016/j.neuroimage.2017.11.024), and functional connectivity matrices from resting-state fMRI scans (https://doi.org/10.1002/hbm.24241).
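The idea of correcting site differences while preserving an age trend can be sketched in a few lines. The example below is a deliberately simplified location-and-scale adjustment: it fits a single straight-line age trend (a stand-in for the GAM), removes each site's mean offset from the residuals, and rescales each site's residual spread to a pooled value. It omits the empirical Bayes shrinkage of ComBat and the joint estimation of site and age terms, and all function names are hypothetical.

```python
from statistics import mean, pstdev


def fit_line(x, y):
    """Ordinary least-squares line y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return slope, my - slope * mx


def harmonize(values, ages, sites):
    """Remove per-site shift and scale from one measurement, keeping the age trend.

    Simplified illustration only: a linear trend replaces the GAM, and no
    empirical Bayes pooling across features is performed.
    """
    slope, intercept = fit_line(ages, values)
    trend = [intercept + slope * a for a in ages]
    residuals = [v - t for v, t in zip(values, trend)]

    # Group subject indices by acquisition site.
    by_site = {}
    for i, site in enumerate(sites):
        by_site.setdefault(site, []).append(i)

    # Location adjustment: subtract each site's mean residual.
    centered = list(residuals)
    for idx in by_site.values():
        shift = mean([residuals[i] for i in idx])
        for i in idx:
            centered[i] = residuals[i] - shift
    pooled_sd = pstdev(centered)

    # Scale adjustment: bring each site's residual spread to the pooled spread.
    harmonized = list(values)
    for idx in by_site.values():
        site_sd = pstdev([centered[i] for i in idx]) or 1.0
        for i in idx:
            harmonized[i] = trend[i] + centered[i] / site_sd * pooled_sd
    return harmonized


# Two sites scanning subjects of the same ages; site B reads 5 units higher
# and has twice the noise of site A.
ages = [20, 40, 60, 80, 20, 40, 60, 80]
sites = ["A"] * 4 + ["B"] * 4
values = [91.0, 79.0, 69.0, 61.0, 97.0, 83.0, 73.0, 67.0]
harmonized = harmonize(values, ages, sites)
```

In this toy input the two sites differ only by an offset and a noise scale, so after adjustment their values coincide; real data would also need the age trend and site terms estimated jointly to avoid confounding when age distributions differ across sites.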

The continued development of image harmonization techniques is critical for the efficacy of future imaging studies. Such techniques enable multiple research centers to collaborate and derive greater power from their results than when working independently. Given the high economic costs of imaging, multi-center collaboration is the most feasible way to acquire large imaging datasets. Current harmonization methods show strong promise of enabling multi-center collaborations across the medical imaging community.

Normal brain development and aging are accompanied by patterns of neuroanatomical change that can be captured by machine learning methods applied to imaging data. MRI-derived brain age has been widely adopted by the neuroscience community as an informative biomarker of brain health at the individual level. Individuals displaying pathologic or atypical brain development and aging patterns can be identified through positive or negative deviations from typical brain age trajectories. For example, schizophrenia, mild cognitive impairment, Alzheimer’s disease, type 2 diabetes, and mortality have all been linked to accelerated brain aging.
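The deviation described above is often summarized as a brain age gap: predicted brain age minus chronological age. The sketch below assumes predictions are already available from some model; the 5-year threshold and the function names are hypothetical choices for illustration, not values taken from our published work.

```python
def brain_age_gap(predicted_age, chronological_age):
    """Positive values suggest an older-appearing brain, negative a younger one."""
    return predicted_age - chronological_age


def flag_atypical(subjects, threshold_years=5.0):
    """Return {subject_id: gap} for subjects whose gap magnitude meets the threshold.

    subjects: iterable of (subject_id, predicted_age, chronological_age).
    threshold_years is an illustrative cutoff, not a clinically validated one.
    """
    flagged = {}
    for subject_id, predicted, chronological in subjects:
        gap = brain_age_gap(predicted, chronological)
        if abs(gap) >= threshold_years:
            flagged[subject_id] = gap
    return flagged


cohort = [
    ("s1", 72.0, 65.0),  # brain appears 7 years older
    ("s2", 40.5, 41.0),  # within the typical range
    ("s3", 30.0, 38.0),  # brain appears 8 years younger
]
atypical = flag_atypical(cohort)
```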

Deep learning has emerged as a powerful tool in medical image analysis, allowing highly complex relationships to be modeled with minimal feature engineering. Because deep learning methods require large amounts of data to achieve good results, they have been slow to enter neuroimaging research, where data is relatively limited. Large multi-study pools of imaging data have recently made it possible to apply these methods.

Our large consortium of neuroimaging data represents a diverse set of individuals spanning different ages, geographic locations, and scanners. This allows us to train highly accurate and reliable models for predicting brain age.

In 2020, we published a paper titled “MRI signatures of brain age and disease over lifespan from a Deep Brain Network and 14,468 people”, in Brain. This paper represents the culmination of our findings related to the application of deep learning in identifying brain age and other neuroanatomical biomarkers from raw brain scans. In this paper, we present a deep learning model that has learned highly generalizable neuroimaging features. We further demonstrate that these features can be used to construct various predictive models for other related tasks such as Alzheimer’s Disease classification, via transfer learning.

Classification Of Morphological Patterns using Adaptive Regional Elements

COMPARE is a method for classifying structural brain magnetic resonance (MR) images that combines deformation-based morphometry with machine learning. Before classification, a morphological representation of the anatomy of interest is obtained from structural MR brain images using a high-dimensional mass-preserving template warping method [1, 2]. Regions that display strong correlations between tissue volumes and classification (clinical) variables, learned from training samples, are extracted using a watershed segmentation algorithm. To achieve robustness to outliers, the regional smoothness of the correlation map is estimated by a cross-validation strategy. A volume increment algorithm is then applied to these regions to extract regional volumetric features. To improve the efficiency and generalization ability of the classifier, a feature selection technique based on Support Vector Machine (SVM) criteria selects the most discriminative features according to their effect on the upper bound of the leave-one-out generalization error. Finally, SVM-based classification is applied using the best set of features and is tested using a leave-one-out cross-validation strategy. Although the algorithm is designed for structural brain image classification, it is readily applicable to functional brain image classification with proper feature images.
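The overall shape of this pipeline (score features against the class variable, keep the most discriminative ones, evaluate with leave-one-out cross-validation) can be sketched as follows. The sketch is heavily simplified and makes several substitutions that are assumptions for illustration: features are ranked by absolute Pearson correlation rather than SVM-based criteria, a nearest-centroid rule replaces the SVM, and the watershed and volume increment steps are skipped by starting from precomputed regional volumes. All function names are hypothetical.

```python
from statistics import mean


def pearson(x, y):
    """Pearson correlation; returns 0.0 when either input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / (var_x * var_y) ** 0.5


def select_features(X, y, k):
    """Indices of the k features most correlated (in magnitude) with the labels."""
    n_features = len(X[0])
    scores = [abs(pearson([row[j] for row in X], y)) for j in range(n_features)]
    return sorted(range(n_features), key=lambda j: scores[j], reverse=True)[:k]


def nearest_centroid_predict(X_train, y_train, x, features):
    """Assign x to the class whose centroid is closest on the selected features."""
    best_label, best_dist = None, float("inf")
    for label in sorted(set(y_train)):
        members = [row for row, lab in zip(X_train, y_train) if lab == label]
        centroid = [mean([row[j] for row in members]) for j in features]
        dist = sum((x[j] - c) ** 2 for j, c in zip(features, centroid))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def leave_one_out_accuracy(X, y, k):
    """Leave-one-out accuracy with feature selection repeated inside each fold."""
    correct = 0
    for i in range(len(X)):
        X_train, y_train = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
        # Select features without the held-out subject to avoid information leakage.
        features = select_features(X_train, y_train, k)
        correct += nearest_centroid_predict(X_train, y_train, X[i], features) == y[i]
    return correct / len(X)


# Toy regional volumes: feature 0 separates the groups, feature 1 does not.
X = [[1.0, 5.0], [1.2, 3.0], [0.9, 4.0], [3.0, 4.5], [3.1, 3.5], [2.9, 5.0]]
y = [0, 0, 0, 1, 1, 1]
accuracy = leave_one_out_accuracy(X, y, k=1)
```

Repeating the feature selection inside every fold matters: selecting features on the full dataset first would let the held-out subject influence the model and inflate the reported accuracy.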

(For more details visit COMPARE’s webpage)

Generative-Discriminative Basis Learning (GONDOLA)

This software implements Generative-Discriminative Basis Learning (GONDOLA), which is explained in detail in [TMI2012] and extended later on in [MICCAI2011]. Theoretical ideas are explained in these papers, but as a brief explanation, GONDOLA provides a generative method to reduce the dimensionality of medical images while using class labels. It produces basis vectors that are useful for classification and also clinically interpretable.

When provided with two sets of labeled images as input, the software outputs features saved in the Weka Attribute-Relation File Format (ARFF) and a MATLAB data file. The program can also save basis vectors as NIfTI-1 images. Scripts are provided to find and build an optimal classifier using Weka. The software can also be used for semi-supervised cases in which a number of subjects do not have class labels (for an example, please see [TMI2012]).
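For readers unfamiliar with ARFF, the sketch below writes a small feature table in that format: a relation name, one `@ATTRIBUTE` line per feature, a nominal class attribute, and comma-separated data rows. The relation name, attribute names, and the `to_arff` helper are hypothetical; the exact names GONDOLA writes are not specified here.

```python
def to_arff(relation, feature_names, rows, labels, class_values):
    """Serialize numeric features and nominal class labels as ARFF text.

    Illustrative helper only; it is not part of GONDOLA.
    """
    lines = [f"@RELATION {relation}", ""]
    for name in feature_names:
        lines.append(f"@ATTRIBUTE {name} NUMERIC")
    # Nominal attribute listing the allowed class values.
    lines.append("@ATTRIBUTE class {" + ",".join(class_values) + "}")
    lines += ["", "@DATA"]
    for row, label in zip(rows, labels):
        if len(row) != len(feature_names):
            raise ValueError("row length does not match the number of features")
        if label not in class_values:
            raise ValueError(f"unknown class label: {label}")
        lines.append(",".join(repr(float(v)) for v in row) + f",{label}")
    return "\n".join(lines) + "\n"


# Two subjects described by their coefficients on two basis vectors.
arff_text = to_arff(
    relation="gondola_features",
    feature_names=["basis_1", "basis_2"],
    rows=[[0.5, 1.25], [2.0, -0.75]],
    labels=["control", "patient"],
    class_values=["control", "patient"],
)
```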

Please cite [TMI2012] if any results were produced with the help of GONDOLA.

(For more details visit GONDOLA’s webpage)

Semi-supervised clustering via GAN

SmileGAN is a semi-supervised clustering method designed to identify disease-related heterogeneity within a patient group. Many diseases and disorders are highly heterogeneous, with no single imaging signature associated with them, and identifying subtypes has been of increasing interest in neuroscience. By construction, the SmileGAN model seeks multiple imaging signatures while avoiding a common pitfall of standard clustering methods: finding variations that are unrelated to the disease and reflect only confounding factors. This is achieved through a GAN-based formulation that simultaneously seeks a number of (regularized) transformations from healthy controls to patients, rather than clustering patients directly, together with an inverse mapping that ensures the subtype/cluster of an individual can be correctly estimated from their scans.

(For more details visit https://pypi.org/project/SmileGAN/)

NiChart

NiChart (Neuro imaging Chart) is a software package that provides a comprehensive solution for analyzing standard structural and functional brain MRI data across studies. Its features include computational morphometry, functional signal analysis, quality control, statistical harmonization, data standardization, interactive visualization, and extraction of expressive imaging signatures.

For more details, you can visit the NiChart website.